Tuesday, April 15, 2008

Monsters & Studio Reviews

Monads are a tight family, infinitesimal differences in their qualities – no discontinuities allowed – You wanna be a MONAD! - conform, hold hands, share a little of yourself with those you touch – no room for Monsters here, this is blood brothers.

The Studio Review Algorithm

Input:

Result presented by Wannabe Architect

Studio Review Algorithm operation:

1. Take Wannabe Architect’s Result

2. Demean It

3. Select randomly from all remaining Possible Answers; if none remain, goto Step 9

4. Instruct Wannabe Architect to rework accordingly by next Review

5. Schedule next Review for 4 days or less

6. Remove Answer from Possible Answer Set

7. If no Answers remain, invite Jury to next Review; declare it the Final Review.

8. Goto Step 1 within 4 days

9. Speak into your cell phone and leave the room
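The Studio Review Algorithm is satire, but it is written as an honest loop. The sketch below is a minimal Python version under a few assumptions. The Possible Answer Set is a plain list. "Select randomly" means a uniform choice. Scheduling and demeaning are not modeled. The function name `studio_review` and its log format are hypothetical.

```python
import random


def studio_review(possible_answers, seed=0):
    """Hypothetical simulation of the Studio Review Algorithm.

    Returns one log entry per Review: the instruction given, and whether
    that Review had already been declared the Final Review.
    """
    rng = random.Random(seed)           # seeded so the "random" critic is repeatable
    remaining = list(possible_answers)  # the Possible Answer Set
    log = []
    review = 1
    final = False
    while True:
        # Take the Wannabe Architect's Result and demean it (not modeled).
        # Select randomly from remaining Answers; if none remain, leave the room.
        if not remaining:
            log.append({"review": review, "final": final,
                        "instruction": "speak into cell phone, leave the room"})
            return log
        answer = rng.choice(remaining)
        # Instruct the Wannabe Architect to rework accordingly.
        log.append({"review": review, "final": final, "instruction": answer})
        # Remove the Answer from the Possible Answer Set.
        remaining.remove(answer)
        # If no Answers remain, the next Review becomes the Final Review.
        if not remaining:
            final = True
        # Go back to the top at the next Review (scheduling not modeled).
        review += 1
```

With n Possible Answers, the loop always runs n + 1 Reviews. The last one is the declared Final Review, and it is the only one that produces no instruction at all.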

Monday, April 14, 2008

what if/then what

A simple closed curve (one that does not intersect itself) is drawn in a plane. This curve "C" divides the plane into two regions, an inside "A" and an outside "B." Even if the plane and the curve are deformed, these two classes persist and force any path from A to B to cross C, regardless of the deformations.
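The theorem is not itself an algorithm, but it has a standard computational counterpart: the even-odd (ray-casting) point-in-polygon test. The sketch below assumes the curve C is approximated by a simple polygon. A point belongs to A when a ray cast from it crosses C an odd number of times. The L-shaped "deformation" and the sampled path are illustrative choices, not anything from the lecture.

```python
def inside(point, polygon):
    """Even-odd (ray-casting) test for a point against a simple closed polygon.

    A horizontal ray is cast from the point toward +x; an odd number of
    crossings with the boundary C means the point lies in the inside region A.
    """
    x, y = point
    crossings = 0
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # this edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x_cross > x:
                crossings += 1
    return crossings % 2 == 1


square = [(0, 0), (4, 0), (4, 4), (0, 4)]
# A "deformed" curve: still simple and closed, stretched into an L shape.
l_shape = [(0, 0), (6, 0), (6, 1), (1, 1), (1, 4), (0, 4)]

# Walk a sampled path from a point in A to a point in B and record each class.
path = [(2 + 0.3 * k, 2 + 0.3 * k) for k in range(11)]
states = [inside(p, square) for p in path]
changes = sum(a != b for a, b in zip(states, states[1:]))
```

The deformed L shape still splits the plane into exactly two classes. Only the membership of particular points changes. The path from (2, 2) to (5, 5) changes class once, at the place where it crosses C.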

Within the topological theorem, there are discrete operations concerning discrete elements (in this case, geometrical forms). While a curve may be reducible to a set of points, this operation depends on the curve being treated as irreducible: the whole curve is essential to this particular function, and therefore it is discrete. While I do not intend to deny the divisibility test for discreteness, I do think this speaks to the nature of the discrete in terms of algorithm.

For instance, the if/then statement, as part of a rule set, functions much like the curve in the Jordan Curve Theorem. It acts as a dividing line that creates, or forces the emergence of, two distinct states that are both contingent on the single statement.

Furthermore, the sequence of rules (rule set) can be discrete when the operation of another algorithm is contingent upon the entirety of this rule set. Von Neumann speaks to this in his discussion of self-reproduction in "The General and Logical Theory of Automata."

If we look at the nature of the algorithm through the lens of "topology," does the algorithm change if the order of its rules is reorganized to yield different results? Is the Turing machine "topologically" consistent even if the order of operations is varied based on inputs?
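A toy illustration of the question uses two arithmetic rules. They are hypothetical and were chosen only because they do not commute. Both orderings contain the same rule set, yet they produce different results. Whether the reordered sequence still counts as the "same" algorithm is exactly what the question asks.

```python
def double(x):
    return 2 * x


def add_three(x):
    return x + 3


def run(rules, x):
    """Apply each rule in turn: the rule set is an ordered sequence."""
    for rule in rules:
        x = rule(x)
    return x


print(run([double, add_three], 5))   # 13
print(run([add_three, double], 5))   # 16
```

Rules that commute (for example two additions) would give the same result in either order. Order only becomes a property of the algorithm when the rules interact.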

While I think the idea of an infinite rule set is logically sound, I think it should be amended to reflect the necessity for recursion in this infinite string. This would suggest genetic instructions akin to evolution, rather than a sprawling sequence that simply doesn't end.
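One way to see a finite rule set producing an unending string through recursion is a Lindenmayer system (L-system). This is swapped in here as an illustration, not as von Neumann's construction. Lindenmayer's "algae" system has only two rules, A → AB and B → A. Applied repeatedly, it yields strings that grow without end.

```python
# Lindenmayer's "algae" system: two symbols, two rewriting rules.
ALGAE_RULES = {"A": "AB", "B": "A"}


def rewrite(s, rules):
    """Apply every rule to every symbol at once; symbols without a rule are kept."""
    return "".join(rules.get(symbol, symbol) for symbol in s)


def generations(axiom, rules, n):
    """The axiom followed by n successive rewritings."""
    out = [axiom]
    for _ in range(n):
        out.append(rewrite(out[-1], rules))
    return out


for g in generations("A", ALGAE_RULES, 5):
    print(len(g), g)
```

The string lengths run 1, 2, 3, 5, 8, 13 (the Fibonacci numbers). The "infinite" string is never stored anywhere. It is implied by two rules plus recursion.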

Thursday, April 10, 2008

Lect 13

We want that grammatical grasp of our concepts, and the flexibility of thought to see it redefine our architectural ones. On algorithm and geometry, our points suggested no internal connection save in a few instances. If algorithm is expanded into any notion of operation, then geometry is constructed from operations but is defined not by them but by geometrical axioms. Geometry, as an organization specific to matter, is a language by which to characterize matter, but it doesn't describe the process of formation. Topology, on the other hand, might be more internally connected: there is always an order to the execution of the steps, and the steps are related to each other in specific, fundamental ways.

We were asking what is discrete. Today: the elements, objects, entities, etc. that remain constant (a variable can be one of these, I suppose) and upon which the rule set acts. Q: is the space in which a sequence runs an "element"? We possibly reduced this, with the fruit shopping analogy, to an if/then condition. And on the whole this might seem to have more to do with topology. It certainly brings us back to the problem of logic.

On a few definitions:

What is a rule space as opposed to a rule set?

Can there be an infinite rule set?

Can the terms of an algorithm magically change? (Can 0 just become 1?) In contrast to: Can the rules or rule set change?

Are we looking at anything like Descartes' invention of analytic geometry? (I mean the axiomatization of geometry? The application of algebraic procedures to geometrical terms?)

What is discrete?

1) Are Rule Sets Discrete?

2) Are Objects, elements, terms and entities discrete? (That is, those things upon which the rule set acts)

3) Is a step in the carrying out of the rule set discrete?

4) Is the if/then conditional discrete?

One thing to keep in mind is that computation has changed the relation between geometry and topology, but this is perhaps a feature of analytical computing.

Another thing to consider is whether algorithm and topology are possibly more connected than algorithm and geometry.

In other words, algorithm might act on geometrical elements but in order for it to act geometry isn't essential to its function. The if/then and the question of loops and so forth seem to suggest an internal connection with topology.

In terms of continuous, we mean possibly divisible. Do we mean infinitely divisible?

Design Office for Research and Architecture

155 Remsen Street

No. 1

Brooklyn, NY 11201

USA

646-575-2287

info@labdora.com

Friday, April 4, 2008

Wednesday, April 2, 2008

On algorithms and boats

Tuesday, April 1, 2008

Lect 12

There were also provocative notions on the snowflake: geometry can describe it but can't account for the process by which it formed. And the question also of the operations of the coin toss when the coin is a sphere - the contrast between the discrete and the continuous.

I'll try to say more about this later. To return to Chu and DeLanda, let me just emphasize two things. For Chu, material systems are themselves products of algorithm; algorithm doesn't derive from dynamical systems. What does this mean? For DeLanda, populational, intensive and topological thinking are essential to genetic algorithms. Why does he exclude the discrete? Finally, why in Leibniz is there no such thing as monsters?

Fw: Summary lect 10

-----Original Message-----

From: "Peter Macapia" <peter.dora@tmo.blackberry.net>

Date: Sun, 30 Mar 2008 08:27:26

To:"Peter Macapia" <petermacapia@labdora.com>

Subject: Summary lect 10

Conflict, Problem, Question

We looked at two problems, one relating to Turing's invention as a thought experiment (how it led to computation) and the other about algorithm and geometry. Instead of looking at Turing's problem as a problem we could relate in its entirety to architecture, I was asking if we could just understand something of the implications of his use of a binary system, of the discreteness of something being 1 or 0. And so I was asking in what sense we can think of cases of the discrete. We looked at mathematical and other types of discreteness, integers vs. irrationals. And then I asked us to consider continuity. The discrete, it seems, is the basis of computation, of the algorithm. It is a whole unto itself. Frank suggested at first that there is a problem of representation here, of symbolization. But we soon came to the fact that the discrete in its essence doesn't really require that. It is a structural and formal property, not one of symbolization.

Geometry and algorithm

It is invalid to say that all algorithms are related to geometry, because there are algorithms dealing with formless tasks. However, because of the computational nature of algorithmic operations, most of them can be expressed in geometrical language, e.g. by plotting graphs of numerical data. An algorithm is an artificial manipulation of matter and systems through the systematic undertaking of procedures that are believed to have specific effects in specific contexts. The primitive idea of an algorithm is a method by which people can get things done. In a geometrical discussion, then, an algorithm must be involved in the creation or alteration of geometry, based on this notion of algorithm as method.

Let’s first try a seating chart:

Let’s assume for a moment that there are two major tendencies in the classroom, talkers and sleepers, and that talkers have the ability to excite the students in the seats around them, while sleepers have the ability to dampen talkers into a little lecture nap session. The interactions are limited to the students directly surrounding the one under consideration. That map looks like this:

We can expect to change the outcome of the class by altering the initial condition.

Ok, let’s do some science on these kids. The point Peter made last week is that if we have a discrete set of inputs (512), we can generate a continuous set of outputs (length of class).
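The original map is not reproduced here, so the sketch below assumes a 3 x 3 seating chart. That gives 9 students, each a talker or a sleeper, and 2^9 = 512 initial conditions. The update rule is a hypothetical majority rule: talkers among the surrounding seats excite a student, sleepers dampen them, and a tie leaves them as they were. As a stand-in for "length of class", the sketch counts updates until the room revisits an earlier configuration. That output is a discrete count, so the example only shows how 512 inputs map onto a spread of outcomes. It does not show continuity.

```python
from itertools import product

SIZE = 3  # hypothetical 3 x 3 seating chart: 9 students, 2**9 = 512 initial conditions


def neighbours(r, c):
    """Seats directly surrounding (r, c), including diagonals, inside the chart."""
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if (dr or dc) and 0 <= r + dr < SIZE and 0 <= c + dc < SIZE:
                yield r + dr, c + dc


def step(grid):
    """One synchronous update; 1 = talker, 0 = sleeper (hypothetical majority rule)."""
    new = {}
    for (r, c), state in grid.items():
        around = [grid[n] for n in neighbours(r, c)]
        talkers = sum(around)
        sleepers = len(around) - talkers
        if talkers > sleepers:
            new[(r, c)] = 1      # talkers excite the student
        elif sleepers > talkers:
            new[(r, c)] = 0      # sleepers dampen the student
        else:
            new[(r, c)] = state  # a tie leaves the student as they were
    return new


def steps_until_repeat(grid, limit=100):
    """Updates until the class revisits a configuration (settles or starts cycling)."""
    seen = {tuple(sorted(grid.items()))}
    for t in range(1, limit + 1):
        grid = step(grid)
        key = tuple(sorted(grid.items()))
        if key in seen:
            return t
        seen.add(key)
    return limit


seats = [(r, c) for r in range(SIZE) for c in range(SIZE)]
outcomes = {bits: steps_until_repeat(dict(zip(seats, bits)))
            for bits in product((0, 1), repeat=len(seats))}
print(len(outcomes), sorted(set(outcomes.values())))
```

Changing only the initial condition (the `bits` key) while keeping the rule fixed is the "alter the initial condition" experiment described above.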

Let’s try turning this on its head. Scrap the seating chart. Sleepers and talkers mill about at will and interact as they please. The space of possible initial conditions for this kind of interaction is beyond what my modern computer can compute for more than 23 students; in other words, it’s virtually continuous. The interactions over the course of class produce a discrete experience that might somehow be captured in numbers. If we can’t even thoroughly describe what might happen first, we certainly cannot say that if Sally comes to class first, then it would follow that… We might begin to operate on the class by setting up rules, but the rules can’t be given by the possible combinations of student interactions; again, the possible space is too large to quantify. The rules then become behavioral heuristics, such as “if Sally isn’t acting cool, then don’t talk to Sally,” rather than the possible combinations of black and white tiles. This doesn’t seem far from the Game of Life, although I was thinking of more complex simulations, like the racial preference study.

The conclusion I draw from this is that this might be a way to classify these different programs capable of generating complexity. Do all of them have the same organs in different interrelationships?

Relative Discretion, Relative Complexity

To construct one such experiment, take a coin, a nickel as we know it today (Jefferson on one side, his home always opposite him), and inflate it from its centroid to form a perfect sphere. Jefferson and Monticello are deformed equally into two hemispheres connected along their equator by a seam (previously the sides of the original nickel cylinder), now similar to the make-up of a red rubber bouncy ball, less the red, rubber, bouncy. The nickel, once approximately the diameter of a marble, is now, in sphere form, closer to the size of a BB, since no alchemy has been performed here: no additional molecules have been added to the nickel to bring it to its new shape, and the original and the new nickel are equal in weight, mass, density and the like. The first nickel, when tossed, performs as Bernoulli suspects, while the second rolls until it finds a perfectly flat position on the ground and reveals, when viewed orthogonally in plan, most of Jefferson’s ponytail, “Liberty”, the mint date, along with “Unum”, the right third of Montec-E-L-L-O, the word “America” and the smooth shiny seam holding the two hemispheres together. The probability that the nickel will land to reveal exactly the same image again even once in the next one hundred tries is slim at best.
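The contrast between the two nickels can be sketched as a simulation under a hypothetical model. The flat nickel is a Bernoulli trial with two outcomes. For the inflated nickel, the point of the sphere facing straight up is uniformly distributed over the sphere. The function names and the "exact repeat" criterion are illustrative assumptions.

```python
import math
import random


def toss_flat(rng):
    """An ordinary nickel: a Bernoulli trial with two discrete outcomes."""
    return rng.choice(("Jefferson", "Monticello"))


def toss_sphere(rng):
    """Hypothetical model of the inflated nickel: the point facing straight up
    is uniform over the sphere, returned as (latitude, longitude) in degrees."""
    z = rng.uniform(-1.0, 1.0)          # uniform height gives uniform area on a sphere
    longitude = rng.uniform(-180.0, 180.0)
    return math.degrees(math.asin(z)), longitude


def repeats_of_first(toss, tries=100, seed=1):
    """Toss once, then count exact repeats of that outcome in the next `tries`."""
    rng = random.Random(seed)
    first = toss(rng)
    return sum(toss(rng) == first for _ in range(tries))


print(repeats_of_first(toss_flat))
print(repeats_of_first(toss_sphere))
```

The flat coin repeats its first face roughly half the time. The sphere's resting image is drawn from a continuum, so an exact repeat is, for practical purposes, never observed.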

As a system, we can understand Bernoulli’s analogy conceptually in architecture, in literature, and in most other arts and soft sciences. Thinking as a physicist, however, I imagine that our two nickels are either both discrete or both continuous. Both nickels are made up of atoms and are probably divisible as such; economically, each is still divisible into 5 pennies. What is important, though, is that the difference between one and the other lies in their distinct formal qualities. Tasting, smelling, listening and other typical analyses all lead to the conclusion that each is qualitatively the same. Neither is more or less continuous than the other. Yet Bernoulli, Lynn, and I continue to believe in the coin toss analogy. This has to do with the notion of relative discretion: each system must be able to define, on its own terms, its own discretion. Under this law a toroid may be considered discrete if it is the primitive geometry from which the system begins. Until acted on by forces (fluid, dynamic, or otherwise), the toroid remains discrete and may serve as a constant against which all post-operative geometries within the same experiment may be measured. In this way the coffee cup, topologically equivalent to the toroid, may be considered continuous, even though the two geometries may contain the same number of vertices, edges, faces, etcetera.

In each of these models an operation is performed on the discrete to produce the continuous.

It would seem also that the notion of relative discretion may pertain to the algorithm itself. We have discussed at length the complexity produced by such simple rule-based algorithms as cellular automata and Turing’s universal machine. Specific conversations in class have debated the actual complexity of the cellular automaton. During one particular session the argument was made that in both the CA and the UM, because the rules are stated before the experiment begins, it would be conceivable to predict the result of each with some certainty. The opposite side of the debate acknowledged that, while the rules are indeed known from the start, the aggregation of these simple rules cannot be predicted, and that the only sure way to realize the results is to run the algorithm. Again, statistically this must be true, although the complexity of the rule set will always have a direct relationship with the odds of predicting the outcome. The coin, for instance, must be tossed for us to know the result with 100% certainty. Before the coin is tossed, though, the speculator is given 50% odds. The CA must be predictable to some degree of certainty in the same way.
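A concrete case for the debate is Rule 30, one of Wolfram's elementary cellular automata. It is offered here as an illustration, not as the CA discussed in class. Its entire rule set fits in one 8-bit number, known before the run begins. Yet no general shortcut is known for predicting its pattern, so in practice it is obtained by running it. The sketch assumes fixed zero boundaries and a single live cell at the start.

```python
def eca_step(cells, rule=30):
    """One update of an elementary cellular automaton with fixed 0 boundaries.

    Each new cell is bit (left*4 + centre*2 + right) of the rule number.
    """
    padded = [0] + cells + [0]
    return [(rule >> (padded[i - 1] << 2 | padded[i] << 1 | padded[i + 1])) & 1
            for i in range(1, len(padded) - 1)]


def run_eca(width, steps, rule=30):
    """Start from one live cell in the middle and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = eca_step(cells, rule)
        rows.append(cells)
    return rows


for row in run_eca(31, 15):
    print("".join("#" if c else "." for c in row))
```

Every rule is visible in the single integer `30`. The printed triangle is nonetheless only available after the loop has actually run.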

An analogy can be made to the game of blackjack, which comes with its own set of rules. Like the rules of a cellular automaton, the rules of blackjack are established at the start. These rules are largely Boolean (hit or stand), with some added complexity in split, double, and bust. Given three cards, the two in the speculator’s hand and the one dealer card shown, a single response is expected. The speculator’s hand includes an ace and a nine while the dealer shows a two: the speculator is expected to stand. The speculator has two eights while the dealer shows a nine: the speculator is expected to split the pair, doubling his or her wager, and hit each hand. Although the chart would indicate that blackjack is significantly more complex than the coin toss, the pit boss knows that blackjack odds are always roughly 42%, tipped only slightly in the house’s favor. Before each card is dealt the speculator knows what to do with each new circumstance and exactly what those new circumstances might be; the ability to count cards, where he or she is seated at the table, the size of the deck, and other advantages may even help to bring these 42% odds closer to the 50% available at the coin toss. As discretion is relative, so too is complexity, and 42% can hardly be considered complex. This is not to say that complexity is not available in blackjack. It does suggest that the complexity must come from a secondary influence or a second-level algorithm. Studying a full blackjack table, you will notice that the response of a speculator immediately to the right of the dealer directly affects the game of the speculator to his or her right, and so on around the table. It is not uncommon for tempers to flare if one speculator offers a response not specified by the given rule set. Such a response disrupts the flow of the next player’s game, resulting in him or her receiving the card before or after the card they would have been dealt had the game been carried out by a computer alone.
The possibility for this type of random operator asserts an open-endedness to the system and allows for the unpredictable result.
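The "single expected response" can be written as a deterministic lookup. The sketch below is a hypothetical, simplified subset of blackjack basic strategy, not a complete chart. It ignores doubling down, surrender, and insurance, and only aces and eights are ever split. Its point is that, given the three visible cards, the rule set returns exactly one move.

```python
def card_value(card):
    """Face cards count 10; an ace is counted as 11 here and reduced later if needed."""
    if card in ("J", "Q", "K"):
        return 10
    if card == "A":
        return 11
    return int(card)


def basic_move(hand, dealer_up):
    """Hypothetical, simplified subset of basic strategy: no doubling down,
    surrender or insurance, and only aces and eights are ever split."""
    dealer = card_value(dealer_up)
    if len(hand) == 2 and hand[0] == hand[1] and hand[0] in ("A", "8"):
        return "split"
    total = sum(card_value(c) for c in hand)
    soft_aces = hand.count("A")          # aces currently counted as 11
    while total > 21 and soft_aces:
        total -= 10                      # count one ace as 1 instead of 11
        soft_aces -= 1
    if total > 21:
        return "bust"
    if soft_aces:                        # a "soft" total
        if total >= 19:
            return "stand"
        if total == 18:
            return "stand" if dealer <= 8 else "hit"
        return "hit"
    if total >= 17:
        return "stand"
    if 13 <= total <= 16:
        return "stand" if dealer <= 6 else "hit"
    if total == 12:
        return "stand" if 4 <= dealer <= 6 else "hit"
    return "hit"


print(basic_move(["A", "9"], "2"))   # the ace-and-nine case above
print(basic_move(["8", "8"], "9"))   # the pair-of-eights case above
```

The "random operator" described above is the player at the table who ignores this function. The function itself has no randomness in it.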

Similarly, Lynn uses the term incomplete to describe the random section method used by both Le Corbusier and Rem Koolhaas, at Maison Citrohan and Tres Grande Bibliotheque respectively, to produce probable geometries. In "Strategizing the Void" Koolhaas uses the terms regular and irregular.

“Imagine a building consisting of regular and irregular spaces, where the most important parts of the building consist of an absence of building. The regular is the storage, the irregular is the reading rooms, not designed, simply carved out. Could this formulation liberate us from the sad mode of simulating invention?”

Here a discrete system is established by regular floor plates and structural organizations, and an algorithm is deployed for networking the system vertically through elevators and escalators. Although this operation alone technically makes the system continuous, the system is still relatively simple. A secondary, random operator is then run through the system to “excavate where efficient,” thus producing the irregular.

A similar process can also be seen in the work of Tschumi, which he describes as Space/Event (Movement) and tests at the Parc de la Villette. He includes the term movement, but always secondarily, and often removes it completely to say Event Space. A more indexical description of his theory would reorder the terms: space-movement-event. Here a discrete system is established with the construction of space. The movement of the user then activates the space to produce the event.

Knowns, Unknowns, and GPS

-Donald Rumsfeld February 12 2002

It can be difficult to make high-stress decisions because, like Donald Rumsfeld, we know there are knowns and unknowns. The algorithm articulates this by reflecting a series of choices like the branches of a tree, with each decision starting at the same place but traveling its own path down one of many possible branches. The troubling issue is all the branches you cannot travel down, which may possibly yield better results than the one you traveled. Considering that the unknowns vastly outnumber the knowns, it also looks almost impossible to make a good decision in terms of the statistical possibilities. This tree of possible choices gives decision making a geometry, defined by its relation to the algorithm. Yet this proposes a model of decision making that is incompatible with the linear formation of time, which always places one thing before or after another. The realization that multiple situations could exist at this point in time makes time’s linearity seem tangible, as any one of a series of possible outcomes could happen if the process were repeated enough. Within this model of understanding, time changes from a linear process to a recursive one, and its repetition becomes a way of abstracting a new truth or meaning through the algorithm and its resulting tree geometry. Time becomes nothing more than another variable within the larger decision process of the algorithm, which is somewhat foreign to the human condition.
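The imbalance between the one path traveled and all the paths not traveled can be counted. Assume a uniform tree with b choices at each of d successive decisions. It has b^d root-to-leaf paths, and only one of them is lived. The uniform branching is a simplifying assumption, and the function name is hypothetical.

```python
from itertools import product


def decision_paths(branches, depth):
    """All root-to-leaf paths in a tree with `branches` choices at every step."""
    return list(product(range(branches), repeat=depth))


for depth in (1, 2, 5, 10):
    paths = decision_paths(2, depth)
    # Only one path is actually lived; the rest remain untravelled.
    print(depth, len(paths), len(paths) - 1)
```

Even with only two options per decision, ten decisions leave 1023 untravelled paths for every path taken.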

I think this idea is present in this interview of Paul Virilio by John Armitage:

John Armitage: How do these developments relate to Global Positioning Systems (GPS)? For example, in The Art of the Motor (1995 [1993]), you were very interested in the relationship between globalisation, physical space, and the phenomenon of virtual spaces, positioning, or, 'delocalization'. In what ways, if any, do you think that militarized GPS played a 'delocalizing' role in the war in Kosovo?

Paul Virilio: GPS not only played a large and delocalizing role in the war in Kosovo but is increasingly playing a role in social life. For instance, it was the GPS that directed the planes, the missiles and the bombs to localised targets in Kosovo. But may I remind you that the bombs that were dropped by the B-2 plane on the Chinese embassy (or at least that is what we were told) were GPS bombs. And the B-2 flew in from the US. However, GPS are everywhere. They are in cars. They were even in the half-tracks that, initially at least, were going to make the ground invasion in Kosovo possible. Yet, for all the sophistication of GPS, there still remain numerous problems with their use. The most obvious problem in this context is the problem of landmines. For example, when the French troops went into Kosovo they were told that they were going to enter in half-tracks, over the open fields. But their leaders had forgotten about the landmines. And this was a major problem because, these days, landmines are no longer localized. They are launched via tubes and distributed haphazardly over the territory. As a result, one cannot remove them after the war because one cannot find them! And yet the ability to detect such landmines, especially in a global war of movement, is absolutely crucial. Thus, for the US, GPS are a form of sovereignty! It is hardly surprising, then, that the EU has proposed its own GPS in order to be able to localise and to compete with the American GPS. As I have said before, sovereignty no longer resides in the territory itself, but in the control of the territory. And localization is an inherent part of that territorial control. As I pointed out in The Art of the Motor and elsewhere, from now on we need two watches: a wristwatch to tell us what time it is and a GPS watch to tell us what space it is!

http://www.ctheory.net/articles.aspx?id=132 March 31 2008

It seems that space and time both become variables within some larger series of decisions that determine who is in control. Are there landmines here? Will this place be bombed in five minutes? It also becomes critically important how the algorithm is defined, since whatever value system the algorithm identifies becomes the system by which space (or geometry), time, and their related decisions are rationalized.

Geometry, Algorithm and Identical Snowflakes

“Several factors affect snowflake formation. Temperature, air currents, and humidity all influence shape and size. Dirt and dust particles can get mixed up in the water and affect crystal weight and durability. The dirt particles make the snowflake heavier, and can cause cracks and breaks in the crystal and make it easier to melt. Snowflake formation is a dynamic process. A snowflake may encounter many different environmental conditions, sometimes melting it, sometimes causing growth, always changing its structure.” Geometry and its rules are what we use to describe, to ‘dismantle’, the snowflake. If we were to describe the process of its formation, geometry wouldn’t help.

Geometry is what we use to describe its form, but geometry has almost nothing to say about the way it is created and formed. The formation of a snowflake is such a multi-parametric, non-linear process (an algorithm) that no matter how many times it is run, two identical snowflakes will never be formed. We may consider two snowflakes identical, perhaps because our eyes can read/recognize/identify only major differences in shape, disregarding the slight ones. But snowflakes are in fact always non-identical, as they have varying precise numbers of water molecules and “spin of electrons, isotope abundance of hydrogen and oxygen” [I’ve no idea what these are; I found it on a chemistry website].

Monday, March 31, 2008

Relationship between Algorithm and Geometry

Mathematics is the order, structure, logic, relationship and quantification of the language of numbers and symbols.

Geometry is a branch of mathematics - an architecture - relating to points, lines, angles, surfaces, and solids.

How does geometry operate on the properties of its architecture (points, lines, angles, surfaces, and solids)? Operations on geometric objects such as:

- Boolean

- Tessellation

- Merging

- Extending

- Trimming

- Triangulating

- Tweening

- Twinning

- Tracing

- Filling

- Shadowing

- Resizing

- Extruding

- Curve fitting

- Interpolating

- Extrapolating

- Splining

Geometry operates (applies functions) on objects through a rule set – an algorithm.
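Two of the listed operations, tweening (interpolating) and resizing, can be sketched as explicit rule sets on vertex lists. This is a minimal version under two assumptions. Shapes are polygons given as lists of (x, y) vertices, and tweening pairs vertices by index, which only works when both shapes have the same vertex count. The function names are illustrative.

```python
def tween(shape_a, shape_b, t):
    """Linear interpolation between two polygons, vertex by vertex (0 <= t <= 1)."""
    if len(shape_a) != len(shape_b):
        raise ValueError("this sketch assumes both shapes have the same vertex count")
    return [((1 - t) * xa + t * xb, (1 - t) * ya + t * yb)
            for (xa, ya), (xb, yb) in zip(shape_a, shape_b)]


def resize(shape, factor, origin=(0.0, 0.0)):
    """Uniform scaling of every vertex about an origin point."""
    ox, oy = origin
    return [(ox + factor * (x - ox), oy + factor * (y - oy)) for x, y in shape]


square = [(0, 0), (2, 0), (2, 2), (0, 2)]
diamond = [(1, -1), (3, 1), (1, 3), (-1, 1)]
frames = [tween(square, diamond, k / 4) for k in range(5)]  # square, 3 in-betweens, diamond
```

Each operation is a fixed rule applied identically to every vertex. In that sense the geometry is operated on by an algorithm rather than defined by one.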

How does one determine the characteristics (properties of its architecture) of a member of a geometric set? Determination of member characteristics such as:

- Resultant numeric properties (size, location, etc.)

- Comparative characteristics (max, min, difference, ratio, arrangement, etc.)

- Set membership (shape, # of vertices, angle, symmetry, state, topology, any other)

Geometry computes object properties through a rule set – an algorithm.
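Each of the three kinds of characteristics listed above can be computed by a short rule set. The sketch covers resultant numeric properties (area by the shoelace formula, bounding box), comparative characteristics (an area ratio), and set membership (vertex count, orientation). It assumes simple polygons given as lists of (x, y) vertices. The names and the small naming table are illustrative.

```python
def signed_area(polygon):
    """Shoelace formula: positive for counter-clockwise vertex order."""
    total = 0.0
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return total / 2


def properties(polygon):
    """Resultant, comparative and set-membership properties of a simple polygon."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    area = signed_area(polygon)
    names = {3: "triangle", 4: "quadrilateral", 5: "pentagon", 6: "hexagon"}
    return {
        "area": abs(area),                                     # resultant numeric property
        "bounding_box": (min(xs), min(ys), max(xs), max(ys)),  # size and location
        "vertices": len(polygon),                              # set membership
        "kind": names.get(len(polygon), f"{len(polygon)}-gon"),
        # degenerate (zero-area) polygons are simply labelled clockwise here
        "orientation": "counter-clockwise" if area > 0 else "clockwise",
    }


square = [(0, 0), (4, 0), (4, 4), (0, 4)]
triangle = [(0, 0), (3, 0), (0, 4)]
a, b = properties(square), properties(triangle)
print(a["kind"], a["area"], b["kind"], b["area"])
print("area ratio:", a["area"] / b["area"])  # a comparative characteristic
```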

RELATIONSHIP: Algorithm is the program which operates within the Geometry's architecture.

Algorithm and Geometry

The Cartesian paradigm has lost its influence on current architectural philosophy, and its universal space has been redefined as a material field of omnipresent difference. The universe, now a matter field, replaces the unchanging essences of a fixed field with interactive entities. Architecture incorporates speeds rather than movements and therefore becomes “as much matter and structure as it is atmosphere and effects.” (Atlas of Novel Tectonics, Reiser + Umemoto, p.23)

Architecture involves assemblages of multiple models, surfaces, and materials, and is therefore no longer continuous but composed of several organizational models operating at different scales. Architecture must perform through a series of techniques in order to provide the right balance between material and force.

The notion of hierarchy found in modernist projects is now used in an innovative way, such that order is not constrained to its own scale or to what lies above or below it. Instead of proceeding in a top-down order from the general scheme to the specific detail, the new concept of hierarchy allows the particular to influence the universal and vice versa. Indeed, inventive architectural organizations and effects emerge out of entities or wholes that cannot be reduced to their parts, and they become readable not as elements of a whole but as whole-whole relationships.

Typology is significant in material practice and allows for a wide variety of architectural organizations. When selecting a specific typology, a correlation between a rough typology and a practical or structural criterion is possible. Typology is therefore used not just as a means of classification at the end of a process but also, in its rough state, as a device during the design process. Typology is less a “codification” than the source of a method of controlled material expression.

Modernist architects thought of geometry as an abstract regulator of the materials of construction; it is now perceived as a notion that unites matter and material behaviors. In contrast to the prior concept of geometry as a regulator of the irrational or accidental state of matter, the latest theory “must be understood not as a supercession of measuring but as the interplay between intensive and extensive differences.” (Atlas of Novel Tectonics, Reiser + Umemoto, p.74)

David Deutsch argues that quantum mechanics should be taken not as a predictive tool but as an explanation of how the world works. If we take quantum theory at face value, we must conclude that our universe is “one of many in an ensemble of parallel universes” that physicists frequently call the “multiverse.” Deutsch believes that the photons in the two-slit experiment are prevented from falling on some parts of the film because they are being obstructed by invisible “shadow” photons from a parallel universe.

The “multiverse” version of quantum theory is one of Deutsch's most developed theories, along with the theory of computation: the idea, developed by the mathematicians Alan Turing, Alonzo Church and others, that all material procedures can be simulated on a computer. Also essential are the theory of evolution and an “epistemology” (theory of knowledge) that takes science not as a human construct but as an ever-improving diagram of the world.

Algorithms and geometry

Algorithms and geometry share an intimate relationship. Algorithms may operate on geometry (real or virtual), and algorithms may operate on other algorithms, but they almost always come down to operating on geometry. Geometry, on the other hand, is created by algorithm. Or was it the other way around? It is the old chicken-and-egg dilemma: did whatever material was contained in whatever blew up in the Big Bang (arguably the originator of all geometry) have an algorithm or a set of conditions as to when it was to explode? Or was there one miraculous appearance of original geometry, after which all other geometry (i.e. everything) behaves according to an algorithm? And if this is the case, what governs this algorithm? Who got to write it?

Geometry and algorithm cannot be separated, it appears, because without rules nothing would work. (Like the anvil you try to drop on the Road Runner just a second too late: without rules the anvil might have dropped faster than it should have, it would have killed him, and then we wouldn’t have any more Coyote vs. Road Runner cartoons.)

Also, without rules there would be no interesting opportunities or exciting leaps when a new level to the rules is discovered.

Researching Lost and Perceptible/Imperceptible Time

But physical and mathematical research has resulted in theories that resist such unification. Since Max Planck kicked off the quantum age by postulating that atoms could “absorb or emit energy only in discrete amounts,” physicists have struggled to explain how one gets from particle A to particle B without recourse to continuous motion. Modern quantum theory describes a world where discrete values are assigned to observable quantities, but despite this, motion and change are continuous (Deutsch, 1). Upset by the narrow focus with which most particle physicists propose a ‘theory of everything’, and “because the fabric of reality does not consist of only reductionist ingredients such as space, time and subatomic particles, but also for example, of life, thought, and computation,” David Deutsch has argued for a Theory of Everything with a wider scope (Deutsch 30). Epistemologically, considering Reality as a malleable fabric allows for the overlay of quantum theories, computational theories and cognitive science, all of which have roots in the disjunction between discrete and continuous.

In the first book of his epic A la Recherche du Temps Perdu: Du Cote de Chez Swann, Marcel Proust explains the discrepancy between the world in which we live and the world which exists, and the role memory plays in a quantization of time and continuity of memory. He describes two different walks his family would take from their house in Combray: the Guermantes way and the Meseglise way. The two were distinctly different in the flora and fauna one would encounter, in the time each walk would take, and in the repercussions that would ripple into the evening, determining whether, or at what time, he might receive his bedtime kiss from his mother. For Proust, these routes were “linked with many of the little incidents of the life which, of all the various lives we lead concurrently, is the most episodic, the most full of vicissitudes; the life of the mind.” (Proust 258) As specific ephemeral qualities would be realized within his perceptibility, so too they would be realized as physical veracities. These interweave to create an intricate network of time and space which was no truer without the knowledge of its physical traits than it was with them, the network creating a conception that depended on the existence of all possibilities:

All these memories, superimposed upon one another, now formed a single mass, but had not so far coalesced that I could not discern between them – between my oldest, my instinctive memories, and those others, inspired more recently by a taste or “perfume”, and finally those which were actually the memories of another person from whom I had acquired them at second hand – if not real fissures, real geological faults, at least that veining, that variegation of coloring, which in certain rocks, in certain blocks of marble, points to differences of origin, age, and formation.

Because the majority of information we receive cognitively is incomplete, perception becomes a process of inference and the assignment of probabilities. When we receive insufficient information, our cognitive processes supply the rest themselves, and they are typically very good at it (Levitin 99). This developed as an evolutionary trait, but it has since become the subject of deeper epistemological observation about our understanding of the universe. Cognitive science allows for a link between our measurable or quantized realities and those which are continuous. Memory and perception begin to broach the link where singularities combine to form a whole greater than the sum of their individual quantities, but which are dualistically perceptible as quantifiably individual and intrinsically continuous within a complete whole.

Sunday, March 30, 2008

Discrete or Continuous?

Both the definition and the implications of continuous include “uninterrupted”, “extent” and “undivided”, yet the continuous is deemed to be subdividable: a sequence of moments or events that are joined together. In other words, the continuous is reducible to a series of the discrete, albeit one that functions, or is perceived, as analog.

The machine which interprets the discrete as continuous is the human brain with the qualia (phenomenal qualities) of its consciousness.

One could conclude that what we experience as continuous is either a fine series of the discrete or a continuity that the brain processes algorithmically in discrete steps. As such, we cannot discern the truly continuous. Saying so does not minimize its importance, as we live in a continuous world. It only serves to emphasize that it is our analog, experiential relationship with our surroundings that most significantly determines the true quality of human life, and our architecture should provide it. It is true that a discrete moment, discrete image or singularity – the digital input – can provide a powerful sensory experience, permanently impregnated and forever recalled; but the discrete is a monumental moment. It does not represent the continuum of the quotidian. It is the discrete view of architecture from afar, not the continuous experience of architecture up close.

Saturday, March 29, 2008

life and the discrete

Is there an absolute zero? Kelvin thinks so. We haven't gotten there yet, and anyhow, is it possible to mechanically, or electronically, transmit anything where absolutely nothing can happen? I don't know. But there is no disagreeing with Kelvin on absolute zero. It's mathematically proven -- that must mean it's right, no?

Look, math is the ideal. It needs discrete values, or else it's just a mess. We take from math so readily perhaps because, in an (albeit troped) Darwinistic model of estimated self-interest, the idea of the division of resources -- of the group versus the individual, or vice versa -- is so fundamental to survival.

So the idea of the discrete precedes our rational understanding of what discrete is. There is one of something. Now there are two of something. RNA, for instance, works on this principle.

The RNA does not know what discrete is -- it merely knows how to act upon its occurrence. And math allows us to document that occurrence in the form of a statement.

Through the wonderful manipulation of energy all around, life has been able to evolve without cognitively knowing the difference between one and two, or between nothingness and existence. Yes, mate selection, the instinct to fight or flee, a horde of aphids eating not one but two lettuces on a given summer's day -- none of these things requires the comprehension of the discrete -- they just act on its existence. And with math we have been able to discuss the matter concretely.

Monday, March 10, 2008

Literature as Diagram

I turned out the light and went into my bedroom, out of the gasoline but I could still smell it. I stood at the window the curtains moved slow out of the darkness touching my face like some breathing asleep, breathing slow into the darkness again, leaving the touch. After they had gone upstairs Mother laid back in her chair, the camphor handkerchief to her mouth. Father hadn't moved he still sat beside her holding her hand the billowing hammering away like no place for it in silence When I was little there was a picture in one of our books, a dark place into which a single weak ray of light came slanting upon two faces lifted out of the shadow. You know what I'd do if I were King? she never was a queen or a fairy she was always a king or a giant or a general I'd break that place open and drag them out and I'd whip them good It was torn out, jagged out. I was glad. I'd have to turn back to it until the dungeon was Mother herself she and Father upwards into weak light holding hands and us lost somewhere below even them without even a ray of light. Then the honeysuckle got into it. As soon as I turned off the light and tried to go to sleep it would begin to come into the room in waves building and building up until I would have to pant to get any air at all out of it until I will have to get up and feel my way like when I was a little boy hands can see touching in the mind shaping unseeing door Door now nothing hands can see My nose could see gasoline, the vest on the table, the door. The corridor was still empty of all the feet in sad generations seeking water. 
yet the eyes unseeing clenched like teeth not disbelieving doubting even the absence of pain shin ankle knee the long invisible flowing of the stair-railing where a misstep in the darkness filled with sleeping Mother Father Caddy Jason Maury door I am not afraid only Mother Father Caddy Jason Maury getting so far ahead sleeping I will sleep fast when I Door door Door It was empty too, the pipes, the porcelain, the stained quiet walls, the throne of contemplation. I had forgotten the glass, but I could hands can see cooling fingers invisible swan-throat where less than Moses rod the glass touch tentative not to drumming lean cool throat drumming cooling the metal the glass full overfull cooling the glass the fingers flushing sleep leaving the taste of dampened sleep in the long silence of the throat I returned up the corridor, waking the lost feet in whispering battalions in the silence, into the gasoline, the watch telling its furious lie on the dark table. A quarter hour yet. And then I'll not be. The peacefullest words. Peaceful words. Non fui. Sum. Fui. Non sum. Somewhere I heard bells once. Mississippi or Massachusetts. I was. I am not. Massachusetts or Mississippi. Shreve has a bottle in his trunk. Aren't you even going to open it Mr and Mrs Jason Richmond Compson announce the Three times. Days. Aren't you going to even open it marriage of their daughter Candace that liquor teaches you to confuse the means with the end. I am. Drink. I was not. Let us sell Benjy's pasture so that Quentin may go to Harvard and I may knock my bones together and together. I will be dead in. Was it one year Caddy said. Shreve has a bottle in his trunk. Sir I will not need Shreve's I have sold Benjy's pasture and I can be dead in Harvard Caddy said in the caverns and the grottoes of the sea tumbling peacefully to the wavering tides because Harvard is such a fine sound forty acres is no high price for a fine sound. 
A fine dead sound we will swap Benjy's pasture for a fine dead sound. It will last him a long time because he cannot hear it unless he can smell it as soon as he came in the door he began to cry I thought all the time it was just one of those town squirts that Father was always teasing her about until. I didn't notice him any more than any other stranger drummer or what thought they were army shirts until all of a sudden I knew he wasn't thinking of me at all as a potential source of harm, but was thinking of her when he looked at me was looking at me through her like through a piece of coloured glass why must you meddle with me dont you know it wont do any good I thought you'd have left that for Mother and Jason.

Thursday, February 28, 2008

Lecture 7

For the texts, I wanted you to get a different sense of the background of the algorithm -- light reading from Berlinski, but it will help us loosen our heads about this.

As for the Deleuze reading on Foucault, I thought it would be useful to have a formal description of the "diagram."

Hopefully we can see what is so strange about the algorithm from Berlinski's point of view.

Btw, as a summary of Tuesday: what I wanted to get at was as many different ways as possible of looking at the "analogy" between computation and architecture. Many offered up points about computation, CA (the output), and architecture. Others looked at a methodological relation.

I think this is interesting because I specified a Turing machine, but I didn't specify what I meant by Architecture. To the extent that many questioned the diagrammatic aspects of CA, it was important to linger on that a bit. What I liked most was the question of whether we could read the diagram in the rule set. This is really important. The fact that Durand had, in a way, already done much of this ought to show us how we might avoid metaphors from other fields by which we return computation to a diagram. Matt's point that the child's game I mentioned is not in architecture is perfectly right. So the point was that, after reading the discussions posted on the blogs, maybe we could just practice an analogy with something more basic, more innocent, and momentarily avoid putting too much pressure on architecture. I think that was asking a lot. But I think it was the right question. The Berlinski reading might provoke us to think a bit differently about this.

Tuesday, February 26, 2008

CA = TM = RS

- the processor is a generic machine.

- the monitor is a means of display (tape, grid, could be other).

Hardware is only the means.

Software = RS (rule set)

Syllogism:

1) TM is RS

2) CA is RS

3) CA is TM

Perception

What is for us a picture may, for another -- say, a blind person -- be meaningless. The CA approach to documenting and calculating variables within a reality allows for vibrancy in the way this information is transposed from what is happening to an understanding of what is happening.

The flexibility -- and deceptively basic simplicity -- of this transposition naturally disposes it (CA) to be adapted to projective exercises such as art, design, and architecture.

Relegating to the Pictorial

02.26.08 email questions

I think it would be difficult to find an equivalent of a Turing machine in architecture. While both processes have a physical output, the way they get to that point seems to be different. In a Turing machine there is an established, limited set of states and values by which the entire system operates to produce physical output on the tape; when this tape is analyzed, it can be seen as the pure result of the machine's procedure. In architecture it is not always evident how the “result” and the “procedure” relate, and in many cases the procedure acted out is not one of a limited number of states or values but one of flux, in which new, unanticipated obstacles arise. Whereas the Turing machine runs its procedure without being affected by anything outside the machine, architecture at times has to absorb these outside influences during the course of a procedure.
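To make the “limited set of states and values” concrete, here is a minimal Python sketch of a Turing machine: a finite transition table, a tape, and a head that moves one cell at a time. The particular machine (one that adds 1 to a binary number) and the names `RULES` and `run_tm` are illustrative assumptions, not anything from the readings.

```python
from collections import defaultdict

# Transition table: (state, symbol read) -> (symbol to write, head move, next state).
# Hypothetical machine that adds 1 to a binary number; the head starts on the
# rightmost digit. "_" is the blank symbol.
RULES = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, done
    ("carry", "_"): ("1", 0, "halt"),    # ran off the left edge: new leading 1
}

def run_tm(tape_str, rules, state="carry", max_steps=1000):
    """Run the machine until it halts; return the non-blank tape contents."""
    tape = defaultdict(lambda: "_", enumerate(tape_str))  # unwritten cells read as blank
    head = len(tape_str) - 1
    for _ in range(max_steps):
        if state == "halt":
            break
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape[i] for i in cells).strip("_")

print(run_tm("1011", RULES))  # prints 1100 (binary 11 + 1 = 12)
```

Nothing outside the table can influence the run: given the same tape, the machine always produces the same output, which is exactly the closure that architecture, absorbing outside influences mid-procedure, lacks.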

The best equivalent to a Turing machine I can see in architecture is the high-rise building, where the construction and space are produced repetitively up the entire building, each floor the same. When occupied, these similar cells or spaces adapt to various functional needs; in a scenario where these functional needs are seen as analogous to the states of the Turing machine, the variation could be the result of such a procedure playing out.

And in what sense do cellular automata "picture" a state of affairs?

The “picture” created by cellular automata is a result of the rule set it follows. It demonstrates a state of affairs in that the rule set acts upon the line before it. Like a precedent study or a virgin site, each new line is an advancement, some type of improvement upon the previous one, based on the rule set. In this way cellular automata can illustrate a model of simplification which can help us visualize this process.
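A minimal Python sketch of that row-by-row process, using Wolfram's elementary convention (two states, a neighborhood of a cell and its left and right neighbors). Rule 30, the width, treating cells beyond the edges as white, and the `#`/`.` rendering are assumptions chosen for illustration; `step` and `run` are hypothetical names. Note that stacking the rows top to bottom is a choice of display, not part of the rule.

```python
def step(row, rule=30):
    """Compute the next row of an elementary CA from the previous row.

    Each cell reads (left, self, right) as a 3-bit number and looks up the
    corresponding bit of the rule number. Cells beyond the edges count as 0.
    """
    padded = [0] + row + [0]
    return [
        (rule >> (padded[i - 1] * 4 + padded[i] * 2 + padded[i + 1])) & 1
        for i in range(1, len(padded) - 1)
    ]

def run(width=15, steps=7, rule=30):
    """Start from a single black cell and collect every generation."""
    row = [0] * width
    row[width // 2] = 1
    history = [row]
    for _ in range(steps):
        row = step(row, rule)
        history.append(row)
    return history

for r in run():
    print("".join("#" if c else "." for c in r))
```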

False choice.

We can abstract the output of a CA by agreeing on a convention and call this a diagram. A similar scheme on a universal CA, Turing Machine, or GOL simulation would net a visual appearance nearly as verbose as the original, and therefore would not be terribly diagrammatic. There’s no “meta” message to be pulled out of these systems other than the rule sets.

Nor can I gain much traction in describing even Lindenmayer systems as pictorial. They speak about topological relationships of the plant’s branching, but rarely fool me for a second as to their origin. Additionally, there is some fancy, and I mean _FANCY_, math on the back end to get {{a->a},{b->a,b}} to look like my houseplant. As for CA, come on… It’s just not a picture. It’s not representing anything beyond the information itself.
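Taken at face value (an assumption, since the notation above is informal), {{a->a},{b->a,b}} reads as two rewriting rules, a → a and b → ab, applied to every symbol at once in each generation. A minimal Python sketch shows that what comes out is nothing but strings; any resemblance to a houseplant has to be added by a separate drawing step. Lindenmayer's original algae rules (a → ab, b → a) are shown for comparison. The function name `rewrite` is hypothetical.

```python
RULES = {"a": "a", "b": "ab"}       # {{a->a},{b->a,b}} read as a -> a, b -> ab
ALGAE = {"a": "ab", "b": "a"}       # Lindenmayer's algae system, for comparison

def rewrite(axiom, rules, generations):
    """Replace every symbol simultaneously, once per generation."""
    s = axiom
    out = [s]
    for _ in range(generations):
        s = "".join(rules.get(ch, ch) for ch in s)  # symbols without a rule stay put
        out.append(s)
    return out

print(rewrite("b", RULES, 4))
print(rewrite("a", ALGAE, 4))
```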

I feel like the pictorial trap is precisely where so many have gone wrong. These systems provide a rich test bed for theories of morphogenesis, social systems and a dizzying array of other phenomena, but it is often forgotten that these are models of phenomena and not the phenomena themselves. The substitution of a model for its phenomena is endemic, and is demonstrated, with whipped cream on top, by the philosophy of Nick Bostrom, who argues that we may be living in a simulation. WTF?! A group of mathematicians has placed the probability at 1 in 20. No joke.

Perhaps this can feed into the other question on the table:

Computation between Geometry and Topology

On the one hand, these systems excite me to a degree that I’m uncomfortable relegating them to a prepositional phrase, yet they’ve enjoyed a grammatical identity in the hands of Noam Chomsky in his research into linguistics. In the interest of completely dorking out this evening I re-checked his Wikipedia entry, and, lo, he’s got this automata theory of formal languages. I read about this a while back and quite honestly couldn’t make any sense of it, but I would guess that Peter’s four food groups would have a place on Chomsky’s table. Turing machines are mentioned by name.

In his “Computational Theory of Morphogenesis,” Przemyslaw Prusinkiewicz draws the axes of his consideration along three lines.

- One or many

- Computing capability (a finite automaton or an automaton with counters)

- Information exchange with the environment
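The second axis, a finite automaton versus an automaton with counters, can be illustrated with a standard textbook contrast (an assumption here, not Prusinkiewicz's own example). A finite automaton can recognize strings of the form ab, abab, ababab; recognizing a^n b^n (n a's followed by exactly n b's) needs a counter, because a fixed number of states cannot remember an arbitrary n. Both function names are hypothetical.

```python
def finite_ab_repeated(s):
    """Two-state finite automaton: accepts '', 'ab', 'abab', ..."""
    state = 0                      # 0 = expecting 'a', 1 = expecting 'b'
    for ch in s:
        if state == 0 and ch == "a":
            state = 1
        elif state == 1 and ch == "b":
            state = 0
        else:
            return False           # any other symbol in this state: reject
    return state == 0

def counter_anbn(s):
    """Automaton with one counter: accepts 'ab', 'aabb', 'aaabbb', ..."""
    count = 0
    seen_b = False
    for ch in s:
        if ch == "a" and not seen_b:
            count += 1             # unbounded memory: what a finite automaton lacks
        elif ch == "b" and count > 0:
            seen_b = True
            count -= 1
        else:
            return False           # an 'a' after a 'b', or too many 'b's
    return seen_b and count == 0
```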

The last of these certainly involves communication, or this kind of go-between again. In modern agent-based programming, the geometry takes the role of the discrete unit, but a computational grammar seems to serve the role of the information exchange. (Or was that topology?) I'm not positive these mix, and I need to think about this. More later.

State of Affairs of the cellular automata

Interact or React

The same could be said for the building process or any assembly of components – whether a pattern of bricks or a steel structure. Nonetheless, the more interesting proposition concerns the intuitive or artful generation of form: is this an unconscious programmed function, perhaps programmed by human experience? In that case, the architect is a TM.

Perhaps there is an analogy to the empirical: reality-based in that it is derived from experience or experiment rather than from theory. Maybe it is the empirical architect who is the TM.

errata: she is always and never the same

#1: Mechanics of The Game of Life

Not having read the Complexification reading last week, I didn’t know that Schelling’s racial housing preference model was outlined there. Variants on Game of Life (herein, GOL) models are extremely interesting. The mechanics are barely touched on in the chapter from Casti. The reading is a great overview of the systems but doesn’t serve to differentiate between traditional automata and those which expand on GOL (or substitution systems, for that matter).

In GOL, the problem associated with applying an arbitrary word-wrap to output is still on the table, but it is mitigated by the fact that both the rules and the game board assume a 2D space. There must have been something in the air in the 1940s, when Ulam and von Neumann were dreaming up cellular automata (Mr. Conway's GOL followed in 1970). Scan-line television sets had been commercially available for only a few years, and a patent for a “Cathode Ray Tube Amusement Device”, a precursor to the first videogame, was filed in 1947. GOL gives the appearance of cohesive objects that update in a way that suggests motion, but they are merely tags that are flipped based on evaluations of the surrounding tags. The updating scans just like an old oscilloscope: infinitely, but over and over the same field. This is distinct from Turing's machine or Ulam's automata, which run over a conceptually infinite tape or column. This introduces a meaningful notion of a neighborhood, where tags update based on the properties of neighboring cells, not just on the history of the simulation. Also of note is the fact that motion can move "upstream" (kind of) during successive refreshes; the tendency of glider guns to fire down and to the right is not an artifact of the scanning but of the gun's orientation, since in the standard rules every cell is updated at once from the previous generation.
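A minimal Python sketch of one GOL generation, assuming the standard rules (a dead cell with exactly 3 live neighbors is born, a live cell with 2 or 3 survives) and a field that wraps around at its edges, one way to get the “over and over the same field” quality. The function name `life_step` is hypothetical. Every cell reads the previous generation and the whole field is replaced at once, so the outcome does not depend on the order in which cells are visited.

```python
def life_step(grid):
    """Return the next generation; grid is a list of equal-length lists of 0/1."""
    rows, cols = len(grid), len(grid[0])
    new = [[0] * cols for _ in range(rows)]   # written separately from the old grid
    for r in range(rows):
        for c in range(cols):
            # Count the eight neighbors, wrapping at the edges (a torus).
            n = sum(
                grid[(r + dr) % rows][(c + dc) % cols]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            # Birth with exactly 3 neighbors; survival with 2 or 3.
            new[r][c] = 1 if n == 3 or (grid[r][c] and n == 2) else 0
    return new
```

Run four times on a glider, this returns the same shape shifted one cell diagonally; rotating the glider 180 degrees reverses its direction, which is the sense in which motion can go “upstream.”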

Casti mentions Schelling’s research from 1971, but as recently as 1996 a serious study by Epstein and Axtell, affiliated with the Santa Fe Institute, used a variation on GOL to test models of social behavior. Models of social phenomena have actually become much more sophisticated. The Sugarscape model, a customization of GOL, represents an individualistic polemic underscored by a Brookings Institution imprint. This is not to say that GOL is wrong, just that it isn't great for modeling society unless you believe that simple rules governing individual behavior give rise to larger social phenomena but do not feed back to the level of the individual...

above: a simulation from Epstein & Axtell's Sugarscape

See: Growing Artificial Societies: Social Science from the Bottom Up

http://books.google.com/books?id=8sXENe8QrmYC&dq=growing+artificial+societies&pg=PP1&ots=HLDOY56HRF&sig=3ECV5_fN9JDaHq1Pti__frNtP94&hl=en

R. Keith Sawyer’s Social Emergence does a fantastic job of surveying the research done in this field so far and situating this within classic problems of sociology.

http://books.google.com/books?id=Hgs007Rd_moC&printsec=frontcover&dq=Social+Emergence&ei=VqrDR_j4BYjcygS-gKGsCA&sig=n71iYvGiMj03Di9JonkOKzRboEk

erratum #2: What’s that in Mr. Wolfram’s pocket?

I thought that the shell question related to Stephen’s discussion of allometric scaling of seashells in NKS, but realized the shell he keeps in his pocket is probably a tapestry coneshell, a species that happens to have CA-like patterning on it.

This is not B.S. There are plenty of real-life phenomena that behave just like CA.

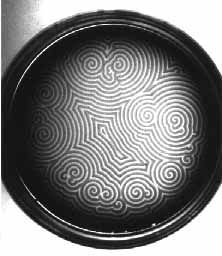

Above is a chemical reaction in a petri dish known as the Belousov-Zhabotinsky reaction.

Monday, February 25, 2008

And in what sense do cellular automata "picture" a state of affairs?

A Turing Machine in Architecture?

A Turing Machine == a program. Not a machine mechanically speaking (hardware), but a machine in the abstract sense (software). These 'machines' take any set of data or inputs (any data set), read it (if they can) and spit out whatever they're supposed to. A program defines what can be read.

What these rules do is basically independent of the circumstances in which they will play out. By circumstances I mean where, when, or on what they will be acting; e.g. the data can be anything as long as it is readable by the rule set. A program has a certain language, and if it encounters 'words' outside of its specific language structure, the program will stop functioning. So at the outset, if a program is to be used, its input must be clear of unreadable data. Cellular Automata are a way of picturing such defined arrays of stuff.

Cellular Automata are a way of picturing how rules affect a field of machines over time. One frame represents how the program has reorganized objects' transformable attributes or variables based on their relation to their immediate surroundings. Each individual unit in the array has certain possible states, a state being the current array of its variables.

Objects with a series of affectable attributes are placed in a field as an initial condition, and all are embedded with a program in order to read and to act. Beforehand, the patterns of attributes to be acted on and their corresponding actions are defined. The program begins, and if the program sees a certain pattern of variable states within the cell's vision, it performs a pre-defined action which reconfigures that pattern.

It is interesting to think how a program "sees". What is it looking at and in what order? Either way it must be systematic.
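The question of order can be made concrete. In the usual formulation of a cellular automaton every cell reads the previous row and the new row replaces it all at once, so order does not matter; if instead cells are overwritten one by one as they are scanned, the order changes the result. A minimal Python sketch comparing the two, assuming rule 90 (each cell becomes the XOR of its two neighbors) and white cells beyond the edges; both function names are hypothetical.

```python
def synchronous(row):
    """Every cell reads the old row; the new row replaces it all at once."""
    padded = [0] + row + [0]
    return [padded[i - 1] ^ padded[i + 1] for i in range(1, len(padded) - 1)]

def sequential(row):
    """Cells are overwritten in place, left to right, so each cell sees its
    left neighbor's new value but its right neighbor's old value."""
    row = list(row)
    for i in range(len(row)):
        left = row[i - 1] if i > 0 else 0
        right = row[i + 1] if i < len(row) - 1 else 0
        row[i] = left ^ right
    return row

start = [0, 0, 1, 0, 0]
print(synchronous(start))  # [0, 1, 0, 1, 0]
print(sequential(start))   # [0, 1, 1, 1, 1]
```

Same rule, same starting row, two different “ways of seeing,” two different results.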

By running a Cellular Automaton, pictures are formed of possible states of the entire system as a whole, while the governing process only happens locally within the space. Certain local states are predicted so as to act upon them, but equilibria, periodic patterns or chaotic systems can emerge within the whole even though the cells are acting independently. Cellular Automata seen as a video, or as stills placed in sequence, allow us to see animate behavior: not necessarily of physical objects, but of the data controlling them.

Is it possible to account for any type of data in a computational Architecture? Programs can be written to accommodate knowable and definable inputs, the most obvious being loads, specifically dead loads, in a structure.

Engineering could be a type of software which reads our often ridiculous plans (or, more recently, parametric models) to spit out revised drawings. The rule set is based on the need to resist load. A field could be forces in space which must be transferred to a surface (the ground).

Strictly speaking, structural engineering at this point in time is not a form of computation. It is top-down; it does not emerge, meaning the rules are applied to a completed form, and the rules themselves do not necessarily generate form. A sort of engineering could take place in design if 'rules for maintaining stability' were integrated and left to run on a set of building parts.

It's interesting to note that two different data sets can shoot out the same answer if the program (machine) is different. Take the data set [1,1]: the program 'add()' spits out two. The same program on a different data set, say [2,1], gives three, but you can get two again if you change the program to 'multiply()'.
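The arithmetic above as a trivial Python sketch; `add` and `multiply` are stand-ins for the 'programs' in the text, not any particular library's functions.

```python
from math import prod

def add(data):
    return sum(data)

def multiply(data):
    return prod(data)

print(add([1, 1]))       # 2
print(add([2, 1]))       # 3
print(multiply([2, 1]))  # 2: same answer as add([1, 1]), from different data and a different program
```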

simulation vs. representation

As it applies to cellular automata, the "generated" diagrams are necessarily a construct -- an interpretation -- of the rule set. As stated in Casti's text, referring to an L-System rule set, "This still doesn't look much like a plant. But we can convert strings of this type into a treelike structure by treating the symbols 0 and 1 as line segments while regarding the left and right brackets as branch points." While this method of representation makes much sense and yields unexpected results (in terms of patterning, for instance) another representation could likely yield similarly unexpected, if not more radical, results. This method inherently introduces a geometrical aspect to the CA process that would not exist without diagrammatic intervention. Therefore, this is not a simulation of the code-events but rather a description of its relationships. L-System equations attempt to describe plant growth, and this is only apparent through a diagram, which is drawn to mimic a tree. The diagram could just as easily mimic coral growth, veins of gold, or ice formation, depending on the geometry used to illustrate the system. Nevertheless, any geometry allows this code-event to be introduced into the spatial-material world, which is invaluable for understanding its process. That is, after all, the inherent purpose of a diagram.
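A minimal Python sketch of the conversion Casti describes, assuming the common binary-tree L-system (axiom 0, rules 1 → 11 and 0 → 1[0]0), which may not be the exact rule set in Casti's text. The symbols 0 and 1 become line segments; [ saves the current position and heading and turns left, ] restores them and turns right. The branching angle is a parameter of the drawing, not of the rule set: change it and the same string yields a different picture. `rewrite` and `to_segments` are hypothetical names.

```python
import math

RULES = {"1": "11", "0": "1[0]0"}

def rewrite(axiom, rules, n):
    """Apply the rules to every symbol simultaneously, n times."""
    s = axiom
    for _ in range(n):
        s = "".join(rules.get(ch, ch) for ch in s)
    return s

def to_segments(s, angle_deg=45.0, step=1.0):
    """Interpret the string geometrically; returns a list of line segments."""
    x, y, heading = 0.0, 0.0, 90.0      # start at the origin, pointing up
    stack, segments = [], []
    for ch in s:
        if ch in "01":                  # draw a segment forward
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif ch == "[":                 # branch point: remember state, turn left
            stack.append((x, y, heading))
            heading += angle_deg
        elif ch == "]":                 # end of branch: restore state, turn right
            x, y, heading = stack.pop()
            heading -= angle_deg
    return segments

tree = to_segments(rewrite("0", RULES, 4))             # one "plant"
coral = to_segments(rewrite("0", RULES, 4), angle_deg=15)  # same string, other geometry
```

The segments can be handed to any plotting tool; nothing in the rule set itself says “tree.”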

As far as pictorial representations are concerned, these generally describe actual objects and actual events in terms of their likenesses. What you see is what you get. As a method of description, it is clearly successful at describing relationships. And while its representation might be skewed by individual perception, it is generally accepted that this is a simulation of real events rather than an abstract interpretation. It is a recounting rather than a birth. It is a snapshot rather than a register. And as such it is limited by the same constraints as the actual objects in terms of space and time.

As it pertains to our ongoing discussions regarding contemporary architectural practice, it seems that the reference -- both the diagram and the pictorial representation -- is somewhat troubling, as both are often overly literal. The role of computation exists in a self-reflexive state: generator, recorder, and interpreter. While certainly multifaceted, is this a limited approach to computation as a creative agent?

Monday, February 18, 2008

What does Turing offer?

Sunday, February 17, 2008

Week 6: two kinds of algorithm

Casti's book is very good at explaining basic features of Turing's machine, cellular automata, L-systems, and the game of life. these are the four basic food groups. they are an extended discussion of what we see in Rocker.

However, i want you to consider in what sense and why they are related to issues of emergence and complexity.

Now, so here we are. We looked at Geometry and Topology as models of meaning. We also looked at what Topology adds (and what is also problematic about its use) to the issues of architecture's use of geometry.

What interests me here is what does computation/emergence/complexity add? I don't think we're far enough into the semester to really say, but i'd like to continually give it a shot.

On the two algorithms: the other texts and the websites i've asked you to look at expand on the problem of the algorithm in Leibniz. In the one, the algorithm is used in calculus for calculating the rate of change -- so it's an expedient. In the other, it is a system of thinking -- and communicating. And so here we are again, back at the issue of the syllogism in a way.

Finally, i want us to look again at Durand and see just how interesting it is to note what is happening in his Precis. these are lectures and material for engineering students that are now being trained as architects -- so there is a pedagogical component. But Hernandez makes it clear that he is underlining an incredible transformation in method.

I don't think we can call it computational, but i think we can call it protocomputational and i'll say why during class. For now, just note the issue of a kind of combinatorial logic.

Tuesday, February 12, 2008

Week 5: Syllogism and algorithm

The other thing i discussed was the question of a model of meaning -- again. And i will continue to discuss it throughout. I remarked on the consistency of the topological model of meaning in Lynn, Eisenman, Ben van Berkel, and others. The point was: just as the sphere represented a geometrical ideality in Boullée, the manifold or the intensive surface constitutes a figure of topological thinking. . . . but one that seems reducible to an image of that figure. It is not entirely so in all cases -- nonetheless Cecil's use of Seifert surfaces for the UN Studio Arnhem transfer station, their topological diagrams as well as the global topological image, FOA's folding and unfolding bands, Eisenman's folds: all these seem to project a figure that is the pictorial geometrical object of topology or catastrophe.

What was different between this and a straightforward platonic or Euclidean geometrical model is that the mathematics in the first case points to an ontology of events rather than things. This was the silly demonstration with the water bottle. I'm just glad the cap was on.

In other words, i was trying to explain how the model of meaning shifted from thing to event. Again, the references are to calculus (first mathematics to quantify events), topology (thinking space, form, and continuity in terms that aren't possible with geometry), and catastrophe theory (which is a hybrid of calculus and topology). (Keep in mind that one of Thom's basic questions is really about modelling phenomena -- but specifically, how to quantify qualitative transformations, like phase change.)

With the subject of this afternoon we looked at Wolfram and his description of cellular automata, and i wanted to get a basic feeling for what this idea consists of. But one of the main points in that discussion is of course the interest in complexity and emergent behavior. There is and will be a lot more to say about this. One point is that historically it has been impossible for philosophy to deal with this problem. In Metaphysics Aristotle calls this the accidental, for which he says there can be "no science." Clearly Wolfram is arguing that indeed these simple functions, and our ability to see their operations, point to a new kind of science. But as Matt pointed out, we have to let the programs run.

Ok, this also brings to discussion the Turing text -- one of the points i wanted to make here is that the very notion that this was a thought experiment about the decidability of any statement about numbers is such an incredible fucking thing. it isn't a computer, it is the idea of this process which hardly even seems mathematical. Again, the cross between logic and mathematics here seems important. Both Leibniz and later Frege were interested in this idea. If there is one thing that I am interested in it is not just the application of the results of computation for architecture -- ie the spinoff results of Wolfram et al, but rather the thought experiment itself. That is why i was asking for an analogy. I wanted to get at that in architecture. Maybe, though I'm not sure, that would mean that we look at architecture kind of like the Entscheidungsproblem in math. hmmm

The Rocker discussion was really about the problem of taking computation, cellular automata, and the other stuff in such a literal kind of mapping way. The spatialization of that information is neither a diagram nor an internal geometrical operation to the geometry of the images she is showing. Rather, it seems, it is a pictorial mapping -- which can certainly become something else, and so there is nothing intrinsically wrong with it, but it seems to be a kind of red herring.

This is why i also pointed out in Wolfram's images the strange idea that if we lay out the iterations one after another, then we get the image of complexity, and yet it isn't part of the computational function, or a rule in the cellular automaton, that you take the first iteration and below it show the second, and so on. When we see the image of the shell that Wolfram carries with him, we feel as though we have a complete correspondence between computation or cellular automata and Nature. hmmm

Cellular Automata

Although Cellular Automata haven't gotten sophisticated enough to adjust the rules (that govern their 'behavior') by themselves through learning, the interesting idea demonstrated in this simple program is how complexity grows over time when it's allowed a considerable number of cycles (steps). For complexity to emerge in cellular automata, the previous step (the completed row of cells filled with either black or white) has to provide the 'environment' with a certain degree of randomness that stimulates the new step to respond.

How well the new steps can create complexity in the pattern within the rigid orthogonal grid depends on the character of the rules. A rule can prescribe either increasing complexity (by diversification) or reducing it (by generalization).

Indeed, a cellular automaton can operate without being visually represented as a grid. However, its geometrical aspects can only be seen if the data is presented (interpreted) graphically. I think it is an interpretation because the pattern in its original form (the algorithm) can be understood as a completely different thing if one prints out the numerical operations and compares them with the grid representation. Most importantly, the predetermined geometry of the cells (squares on a 2D grid) frames our understanding of cellular automata as an orthogonal system only. I wonder what would happen to the visual quality if the vertical lines in the grid became diagonal and the cellular operation were transformed from 2D to 3D.
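To make the point about interpretation concrete, here is a minimal Python sketch. Rule 90, the width of 15 cells, and reading each row as a binary number are illustrative assumptions. The same rows are emitted once as plain integers and once drawn as a square grid, and nothing in the computation itself prefers the second reading.

```python
def evolve(rule, width, steps):
    """Run an elementary automaton from one black cell; edges count as white."""
    row = [0] * width
    row[width // 2] = 1
    rows = [row]
    for _ in range(steps):
        padded = [0] + row + [0]
        row = [(rule >> (4 * padded[i - 1] + 2 * padded[i] + padded[i + 1])) & 1
               for i in range(1, width + 1)]
        rows.append(row)
    return rows


rows = evolve(90, width=15, steps=7)

# Reading 1: the raw data, each row read as a binary number.
as_numbers = [int("".join(map(str, r)), 2) for r in rows]
print(as_numbers)

# Reading 2: the same data drawn on a square grid.
for r in rows:
    print("".join("#" if c else "." for c in r))
```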

scales

Cellular automata come in a series of scales: the more variables are added, the closer to infinite the automaton becomes. As new states are added, the automaton becomes more complex.

The most basic kind has 2 states per cell, and a cell’s neighbors are defined as the adjacent cells on either side of it. A cell and its 2 neighbors form a neighborhood of 3 cells, so there are 2^3 = 8 possible patterns for a neighborhood.
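The counting above can be checked with a short Python sketch. The function names are hypothetical. A rule is just a choice of new state for each of the 8 neighborhoods, so with 2 states there are 2^8 = 256 rules. With k states and a 3-cell neighborhood, the same reasoning gives k^(k^3) rules, which is one way to quantify how quickly the automaton grows as states are added.

```python
from itertools import product


def rule_table(rule):
    """Map each of the 2**3 = 8 possible neighbourhoods to the new cell state.

    Neighbourhoods (left, centre, right) are read as 3-bit binary numbers;
    bit n of the rule number gives the output for neighbourhood n.
    """
    return {
        (l, c, r): (rule >> (4 * l + 2 * c + r)) & 1
        for l, c, r in product((1, 0), repeat=3)
    }


def rule_count(states, neighbourhood_size=3):
    """Number of distinct rules: states ** (states ** neighbourhood_size)."""
    return states ** (states ** neighbourhood_size)


table = rule_table(30)
for neighbourhood, new_state in table.items():
    print(neighbourhood, "->", new_state)

print(rule_count(2))  # 2 states: 2**8 = 256 elementary rules
print(rule_count(3))  # 3 states: 3**27 rules
```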

With each application of a rule, a new generation is created.

What would happen if it weren’t based on a grid system?

Automata-Turing-Cellular: On/Off

All circular functions diminish until they lose symmetry, at which point they either reach a tipping point or create one.

Cellular Automata’s geometrical aspects:

questions from email - Turing machine/Cellular Automata

Any device that can perform a similar rule set would seem to be an analogy for Turing’s machine. Computer programs are particularly close. They carry out a series of operations that depend on a group of variables controlled by a user, and that user can provide unpredicted input to the system, causing certain operations or states to occur. When such programs are of reasonable complexity, they seem able to validate ideas or concepts we could not otherwise understand because of their complexity. So, in the same way that Turing’s machine tried to answer the Entscheidungsproblem by building up from a simple series of logical operations, modern computer programs try to answer complex geometrical and logical problems by building up from a series of simple logical operations.
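How little is needed can be seen in the first example machine in Turing’s “On Computable Numbers”. It only prints 0 and 1 on alternate squares of its tape, using four m-configurations (b, c, e, f). Below is a minimal Python sketch of a table-driven machine running that example. The dictionary encoding, the blank symbol " ", and the fixed step budget are assumptions of this sketch; Turing’s machine never stops, so we cut it off.

```python
def run_machine(table, start, steps):
    """Run a table-driven Turing machine for a fixed number of steps.

    table maps (m-configuration, scanned symbol) to
    (symbol to print or None, head move "R" or "L", next m-configuration).
    Blank squares are represented by " ". Returns the printed stretch of tape.
    """
    tape = {}
    head, state = 0, start
    for _ in range(steps):
        write, move, state = table[(state, tape.get(head, " "))]
        if write is not None:
            tape[head] = write
        head += 1 if move == "R" else -1
    if not tape:
        return ""
    return "".join(tape.get(i, " ") for i in range(min(tape), max(tape) + 1))


# Turing's first example: print 0, skip a square, print 1, skip a square, ...
# It never reaches a configuration with no next move ("circle-free").
alternating = {
    ("b", " "): ("0", "R", "c"),
    ("c", " "): (None, "R", "e"),
    ("e", " "): ("1", "R", "f"),
    ("f", " "): (None, "R", "b"),
}

print(run_machine(alternating, "b", steps=12))
```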

Question 2: Geometrical Aspects of Cellular Automata?

The ability of cellular automata to produce logical, understandable patterns, as well as patterns of apparent randomness, demonstrates that both can be produced by the same simple system. This challenges the idea that randomness cannot be logically produced or created. It moves the term “random” away from something that denotes a process as well as a formation or geometry, and toward a term that can only describe a product, such as a geometry. With the ends logically removed from their origin, geometry now illustrates something more complicated than a mere representation of logic.
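One way to see both kinds of output coming from the same simple system is the minimal Python sketch below. It reads off the middle cell at each step for rule 250, which gives a plain alternation, and for rule 30, which gives a sequence that looks random. The choice of rules and of the middle column is illustrative. Wolfram has proposed rule 30’s centre column as a source of random bits, but looking random is not a proof of randomness.

```python
def centre_column(rule, steps):
    """Return the middle cell's state at each step, starting from one black cell.

    The row is 2 * steps + 1 cells wide, so the pattern (which grows by at most
    one cell per side per step) never reaches the edges within `steps` steps.
    """
    width = 2 * steps + 1
    row = [0] * width
    row[steps] = 1
    column = []
    for _ in range(steps):
        column.append(row[steps])
        padded = [0] + row + [0]
        row = [(rule >> (4 * padded[i - 1] + 2 * padded[i] + padded[i + 1])) & 1
               for i in range(1, width + 1)]
    return column


print("rule 250:", "".join(map(str, centre_column(250, 32))))  # regular
print("rule 30: ", "".join(map(str, centre_column(30, 32))))   # irregular
```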

turing automata

1. There seems to be an analogy to Turing’s machine, on a very abstract scale, in evolution. Circular machines reach a finite point, or a wall, at which there is no possible next move. Similarly, though over a much longer period of time and perhaps without such concrete results, evolution renders a ‘circular’ species extinct. The vast majority of species are ‘circle-free’. ‘Circle-free’ species are influenced by the immediately preceding configuration (relatively speaking, time-wise). For example, since humans no longer have a rough diet of tough-to-digest plant material, humans no longer need the appendix to aid digestion. The ‘0’ or blank space in our code has been erased, and our code has been transformed into one that is more compact and efficient.

2. Cellular automata have no geometrical aspects. They can be assigned to run on any type of geometry with the same sets of rules.
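Point 2 can be sketched in Python. The neighbour-map representation and the use of rule 90 are illustrative assumptions. The update rule only consults which cells are adjacent, never coordinates, so the same rule can run on a ring, a line, or any other arrangement that gives each cell a left and a right neighbour.

```python
def step(states, neighbours, rule):
    """Apply an elementary rule on any arrangement of cells.

    states maps each cell to 0 or 1; neighbours maps each cell to its
    (left, right) neighbours. The rule never looks at positions, only at
    this adjacency, so the 'geometry' lives entirely in the neighbours map.
    """
    return {
        cell: (rule >> (4 * states[l] + 2 * states[cell] + states[r])) & 1
        for cell, (l, r) in neighbours.items()
    }


# A ring of 8 cells: the last cell's right neighbour is the first cell.
n = 8
ring = {i: ((i - 1) % n, (i + 1) % n) for i in range(n)}

states = {i: 0 for i in range(n)}
states[0] = 1
for _ in range(4):
    print("".join(str(states[i]) for i in range(n)))
    states = step(states, ring, 90)
```

On this 8-cell ring, the pattern that grows from a single black cell dies out after four steps.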

Friday, February 8, 2008

Week 3: Models of meaning continued

In terms of our discussions, we want to recognize the contrast that Lynn, as well as Balmond, van Berkel, and Eisenman, draw between topology on the one hand and geometry, Cartesianism, and stasis on the other. The question is: what model of meaning does topology give us? There are a number of things, but one of the most critical is that it shifts the ontology of architecture from a thing to an event. Look at the essays again and note the terms they use in relation to topology.

Impossible to Avoid Geometry?

We should ask: what are some of the basic features, basic operations, that are internal to architecture?

By which I mean: when we are designing, are there specific things that we can’t avoid, like drawing lines?

I would like to make a very simple proposition which is this: we can’t seem to avoid geometry. Now this might mean different things in different contexts. And so maybe there isn’t an ultimate foundation from which we could say, e.g., “This is how geometry is essential to architecture.” But at the very least, when we do something in architecture, that something seems to imply or involve geometry.

Geometry Lacking Space

(Let’s not get into this just yet: Descartes’s development of an algebraic system for geometry, that is, geometry with symbols rather than figures. Now space has a metric, discrete and continuous. The grid comes later, but the coordinate system is the basis. In Euclid, by contrast, space is metric but simply continuous. Somewhere Panofsky writes about the problem of the infinite in the development of perspective.)

By having you look at Euclid’s definitions, postulates, and common notions, I wasn’t suggesting that this would give us a foundation of geometry for architecture. Rather, in its economy, we could look at the way geometry is defined from within mathematics. And so, when we consider the elements, we consider how they relate to one another. Further, when we read them in a particular order, they seem to be defined from the simplest elements to the most complex: from point, line, and plane, to the metric relations between them, to figures and the metric properties of those basic figures.

But notice that in the 23rd definition Euclid seems to be saying something, for the first time, about geometry’s relation to space. One could say that he is already talking about space in the previous definitions. I’m not so sure. For one thing, just because he is talking about the metric properties of figures, or the relationships between points, lines, and planes, it doesn’t necessarily follow that he is pointing to geometry’s relation to space in general. Rather, it seems that whatever one constructs as ‘geometrical’ has these properties, for good. But in the 23rd definition, it seems as if those properties might now also hold good in some fashion for something beyond the geometrical figure or element as such. By which I mean this: up until the 23rd definition, we have something that counts as a general property of a geometrical thing. What has not yet been said is what the space around that thing is; we have been told only what that thing is as such. In other words: does geometry as such say anything about the nature of space (as opposed to geometrical figures)? If it does, it is ambiguous. The parallel definition says not what space is, but one of the conditions of space that holds good, for good: if two lines are crossed by a third and the interior angles on the same side together make two right angles, then those two lines will never meet.

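The fifth postulate, by contrast, states that if a straight line falling on two straight lines makes the interior angles on the same side less than two right angles, the two lines, if produced indefinitely, will meet on the side where the angles are less than two right angles.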
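A small worked check of the fifth postulate follows as a Python sketch. The coordinate setup is an assumption of this sketch, not Euclid’s: place the transversal on the x-axis from A = (0, 0) to B = (d, 0), let the two lines leave A and B at interior angles alpha and beta above the transversal, and solve for their intersection. The meeting point has height d·sin(alpha)·sin(beta) / sin(alpha + beta). It therefore lies above the transversal exactly when alpha + beta is less than two right angles (180°), and there is no meeting point when the sum equals 180°.

```python
import math


def meeting_point(alpha_deg, beta_deg, d=1.0):
    """Intersection of two lines cut by the transversal from A=(0,0) to B=(d,0).

    alpha_deg and beta_deg are the interior angles (in degrees) that the lines
    through A and B make with the transversal on its upper side.
    Returns (x, y), or None when the lines are parallel.
    """
    a, b = math.radians(alpha_deg), math.radians(beta_deg)
    s = math.sin(a + b)
    if math.isclose(s, 0.0, abs_tol=1e-12):
        return None  # angles sum to two right angles: the lines never meet
    t = d * math.sin(b) / s  # signed distance from A along the first line
    return (t * math.cos(a), t * math.sin(a))


print(meeting_point(60, 60))    # sum 120°: meet above the transversal (y > 0)
print(meeting_point(90, 90))    # sum 180°: None, the lines are parallel
print(meeting_point(100, 100))  # sum 200°: meet below, where the angles sum to 160°
```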

[If you want to know something more about the history of this problem, see Bernard Cache, “A Plea for Euclid” on the server. The essay, among other things, is a critique of architects’ indiscriminate discussion of topology and digital design.]

One of the points of this discussion is this: it is possible to talk about consistency with regard to geometrical propositions qua geometrical things, but it is not so easy, at least, to talk about space in that way. Another point is to clarify for ourselves what we mean when we say that architecture is in some capacity always tied to geometrical propositions. That is maybe a more difficult discussion. In either case, let’s at least assume that without geometry it is difficult to perform an architectural operation. But let’s also admit that an architectural proposition is not necessarily a geometrical one, and vice versa. The last point is that, insofar as architecture needs geometry, in some sense architecture is architecture only insofar as it needs geometry. Without it, perhaps, it isn’t architecture. Perhaps.

Architecture, we might say, is always bound up with problems of spatial relations. It seems, or did seem for some time, that geometry was the only field of mathematics capable of providing general laws for the features of spatial organization (Descartes’s invention is still a continuation of geometry).

Thinking about space and continuity in other terms: Topology

Another field that became relevant recently was topology (for a history of topology see http://www-history.mcs.st-and.ac.uk/HistTopics/Topology_in_mathematics.html). There are early indirect examples of this. Christopher Alexander used topological diagrams for his discussion of pattern languages. Hannes Meyer introduced a reconfiguration of topological urban space. Le Corbusier used a topological system to hybridize infrastructure (a sidewalk) and architecture in the Carpenter Center at Harvard. Baudrillard discussed the Centre Georges Pompidou by Piano and Rogers as a topological system. Frederick Kiesler used a topological system in his Endless House, which he also discussed in his book Endless Space (http://www.surrealismcentre.ac.uk/images/KIESLER.jpg and http://en.wikipedia.org/wiki/Frederick_Kiesler).

More recently, however, topology became a special field of interest, especially during the 90s with the development of digital design. Digital design, in general, really changed the formal domain of geometrical thinking in architecture through a new kind of plasticity. Some of this plasticity was tied to animation techniques; some was related to the use of advanced geometrical control with NURBS curves and surfaces. Chu has, I think, correctly identified this as morpho-dynamism. Peter Eisenman, among others, invoked topology as a new way of thinking about spatial relations, and this is what should interest us. See, for instance, his essay on Rebstock on the server: topology is invoked indirectly through the reference to René Thom’s catastrophe theory, which is a combination of calculus and topology, the same way Descartes’s coordinate system is a combination of algebra and geometry. Jeff Kipnis suggested that topology was a way of removing the ideality of geometry, by which he meant that it was a means for rethinking architecture away from the traditional figure/ground relation. This is also what Eisenman had in mind. He and Gregg Lynn pioneered a new attitude toward space and geometry by introducing, thanks to John Rajchman, Gilles Deleuze’s discussion of the fold in The Fold: Leibniz and the Baroque.

Let’s now point out a few things about topology. First, topology is a distinct field of mathematics that emerged in the 18th century with the work of Leonhard Euler. He produced at least two major insights into problems of spatial relation that departed significantly from geometrical concepts of spatial relation (for a history of this see: http://www-history.mcs.st-and.ac.uk/HistTopics/Topology_in_mathematics.html).
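One of Euler’s best-known results of this kind is his 1736 answer to the Königsberg bridge problem. His argument ignores distances and shapes entirely and counts only how many bridge ends touch each land mass. A minimal Python sketch of that counting follows; the letters for the land masses are labels chosen for this sketch. For a connected arrangement, a walk crossing every bridge exactly once exists only if zero or two land masses have an odd number of bridge ends. In Königsberg all four are odd, so no such walk exists.

```python
from collections import Counter

# Königsberg: the Kneiphof island (A), the two river banks (B, C) and the
# land to the east (D), joined by seven bridges.
bridges = [("A", "B"), ("A", "B"), ("A", "C"), ("A", "C"),
           ("A", "D"), ("B", "D"), ("C", "D")]


def degrees(edges):
    """Count how many bridge ends touch each land mass."""
    count = Counter()
    for u, v in edges:
        count[u] += 1
        count[v] += 1
    return count


def has_euler_walk(edges):
    """Degree-parity test for a connected graph: a walk using every edge
    exactly once exists iff zero or two vertices have odd degree."""
    odd = [v for v, d in degrees(edges).items() if d % 2 == 1]
    return len(odd) in (0, 2)


print(degrees(bridges))         # A: 5, B: 3, C: 3, D: 3
print(has_euler_walk(bridges))  # False
```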

Definitions: topology “ignores individual differences among, say, figures bounded by closed curves, and treats them as a group that have certain invariants in common.” Barr 151. One way to get around this vagueness is to understand that topology is concerned less with the specifics of a figure than with a mathematical logic that relates an entire set of figures, and here the key word is set. “Topology aims at the invariant in things; the things have to be referred to somehow, if very generally, and the best way to refer to things in this way, and yet retain the kind of relationship that exists with topological invariants, is by treating them in groups, or sets.” Barr 163.

(Important to note: see also the grammatical background to this issue in Euler, who developed the formula for a topological invariant of any polyhedron. In Proofs and Refutations, Imre Lakatos notes in a footnote that Euler made this discovery when he changed the terms that defined polyhedra from vertices, lines, and faces to vertices, edges (acies), and faces.)
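Euler’s formula can be checked directly. For every convex polyhedron, vertices minus edges plus faces (V − E + F) equals 2, whatever its metric shape. The Python sketch below computes this number and groups figures by it, in the spirit of treating figures as sets that share an invariant. The list of solids is an illustrative choice. The “picture frame” polyhedron has a hole through it (16 vertices, 32 edges, 16 faces) and gives 0 instead of 2, which shows that the invariant tracks topology rather than geometry.

```python
# Vertex, edge and face counts (V, E, F) for a few polyhedra.
polyhedra = {
    "tetrahedron": (4, 6, 4),
    "cube": (8, 12, 6),
    "octahedron": (6, 12, 8),
    "dodecahedron": (20, 30, 12),
    "icosahedron": (12, 30, 20),
    "triangular prism": (6, 9, 5),
    "picture frame": (16, 32, 16),  # a square frame with a hole through it
}


def euler_characteristic(v, e, f):
    """The topological invariant V - E + F."""
    return v - e + f


# Group the figures by the invariant rather than by their shapes.
groups = {}
for name, (v, e, f) in polyhedra.items():
    groups.setdefault(euler_characteristic(v, e, f), []).append(name)

print(groups)
```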

Topology as a different way of discussing space

“As a rule topologists confine the use of the word ‘continuous’ to processes, rather than spaces – a line is a 1-dimensional space – but if we want to use it for a line, then continuity relates the set of all the points on the line to the set of all the real numbers.” Barr 152.